In early 2025, Calastone released *Decoding the Economics of Tokenisation*, a white paper that laid out the economic case for tokenisation in asset management. The research drew directly on fund-level data, functional cost inputs, and performance expectations shared by senior decision-makers across the industry. It presented a clear and compelling narrative: tokenisation and distributed ledger technology (DLT) could unlock over $135 billion in operational efficiencies across the asset management industry, reduce operating costs by up to 23%, and shorten fund launch timelines by around three weeks.

Crucially, this upside comes against a backdrop of rising operational costs: fund processing expenses are projected to increase by an average of 32% over the next three years if current operating models remain unchanged. That mounting cost pressure makes the case for transformation more urgent than ever.
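As a rough sense check, a 32% rise over three years corresponds to roughly 9.7% a year if costs compound at a steady rate. That steady-compounding assumption is ours, not the paper's, and `implied_annual_growth` is a hypothetical helper used only for this illustration.

```python
def implied_annual_growth(total_increase: float, years: int) -> float:
    """Constant yearly rate that compounds to `total_increase` over `years`.

    Assumes steady compounding, which the source projection does not state.
    """
    return (1 + total_increase) ** (1 / years) - 1

# 32% projected rise in fund processing costs over three years (from the paper).
rate = implied_annual_growth(0.32, 3)
print(f"Implied annual cost growth: {rate:.1%}")  # Implied annual cost growth: 9.7%
```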

The market response was immediate and affirming. But it also prompted a follow-up question from many industry leaders: what lies beneath those headline figures?

This paper answers that call. Drawing from the same dataset used in our original research, we now take a more detailed look at the operational realities beneath the promise of tokenisation. This “under the bonnet” view explores cost allocations, error volumes, functional inefficiencies, and investor dynamics that inform the broader business case.

A more detailed breakdown of the operating costs of a fund reveals the mechanics of why the industry is under pressure and where the greatest opportunities for transformation lie.

Using fund-level data from a $1.264 billion AUM benchmark fund, the picture becomes starkly clear: fund accounting alone consumes more than $2.1 million annually per fund, making it by far the largest single operational cost line. Other significant expenses include reconciliations at $837,495, transfer agency at $620,459, and settlements at $615,646.

The concentration of cost in the back office is overwhelming: 64% of total operating costs sit here, driven by post-trade processing, manual oversight, and duplicated processes. The industry also remains heavily labour-dependent, with 47% of all costs going to direct headcount and the remaining 53% to third-party providers.

This level of transparency reveals a complex, fragile operating environment in which manual processes are not only costly but also compound risk and drag on scalability.

It also explains why asset managers consistently cite fund accounting, transfer agency, and reconciliations as the top priorities for transformation. When 0.74% of AUM is lost to operational overhead, every basis point recovered is a competitive advantage.
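The sketch below works through the arithmetic for the benchmark fund. It assumes, without the paper saying so explicitly, that the 0.74%-of-AUM overhead applies to the $1.264 billion benchmark fund, which would put its total operating cost at roughly $9.4 million a year. Fund accounting is entered as $2.1 million even though the reported figure is "more than" that, so its share is a floor; variable names are illustrative.

```python
# Assumption: the 0.74%-of-AUM overhead applies to the benchmark fund.
AUM = 1_264_000_000       # benchmark fund AUM, USD
OVERHEAD_RATIO = 0.0074   # operating cost as a share of AUM (74 bps)

total_cost = AUM * OVERHEAD_RATIO
back_office = total_cost * 0.64   # 64% of costs sit in the back office
headcount = total_cost * 0.47     # 47% are direct headcount
third_party = total_cost * 0.53   # 53% go to third-party providers

# Reported functional cost lines for the benchmark fund (fund accounting is a lower bound).
functions = {
    "fund accounting": 2_100_000,
    "reconciliations": 837_495,
    "transfer agency": 620_459,
    "settlements": 615_646,
}

print(f"Implied total operating cost: ${total_cost:,.0f}")
print(f"Back office: ${back_office:,.0f} | headcount: ${headcount:,.0f} | third party: ${third_party:,.0f}")
for name, cost in functions.items():
    print(f"{name:>16}: {cost / total_cost:.1%} of total operating cost")
```

On these assumptions, fund accounting alone would account for more than a fifth of the fund's total operating cost.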

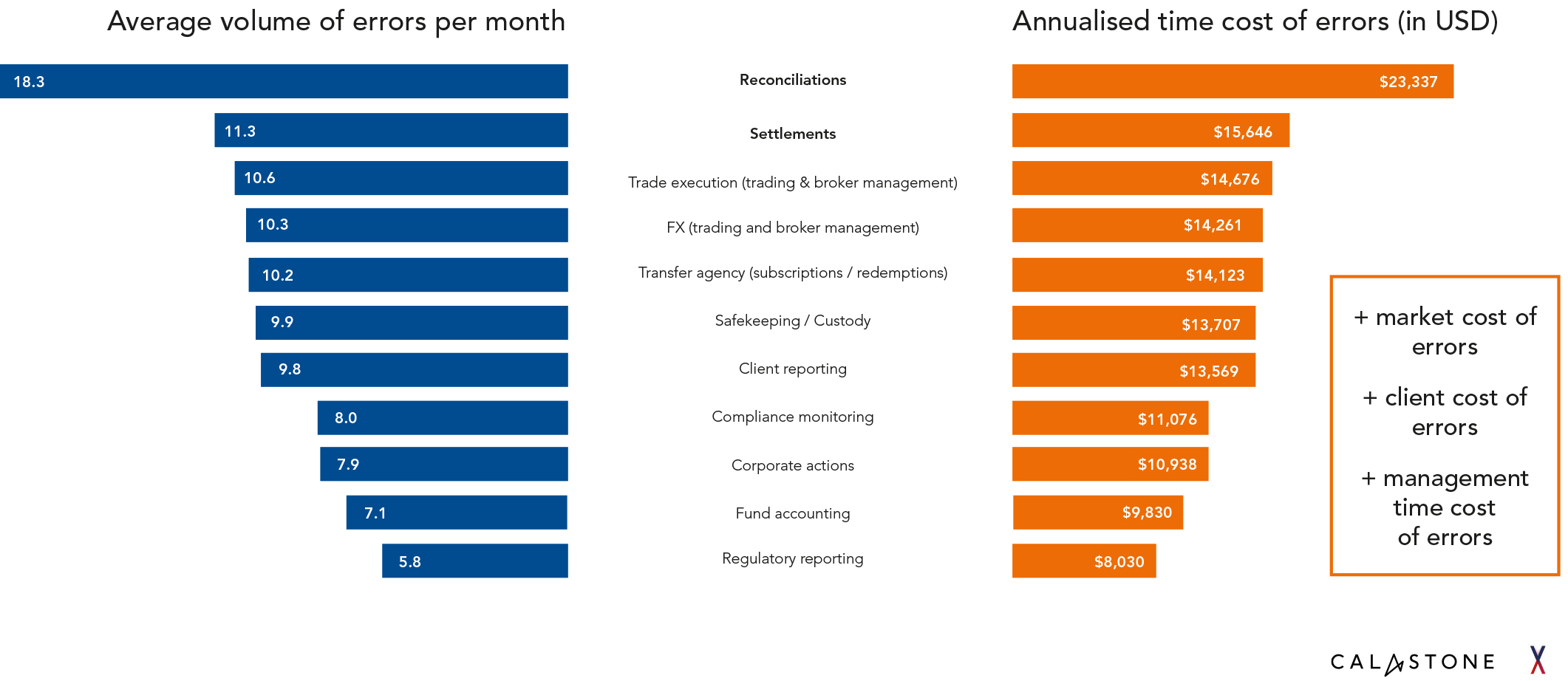

Errors, corrections, and rework are deeply embedded in today’s fund operating model, compounding operational friction.

Reconciliations stand out as the single largest source of operational error, averaging 18.3 mistakes every month. Each one carries not just the risk of delays or misstatements but an annualised time cost exceeding $25,000.

Other critical functions are similarly exposed. Trade execution averages 10.6 errors per month, each costing $14,676, while fund accounting suffers 7.1 errors monthly, with an average cost of $9,830 per mistake.

These errors are the structural byproduct of a fragmented operating model, one built on duplicated data, manual interventions, and reconciliation-heavy processes.

Tokenisation offers a way to eliminate entire classes of errors altogether. By replacing fragmented, asynchronous data flows with a shared, immutable ledger, tokenisation removes the need for reconciliations entirely, turning what was once a persistent operational tax into an operational advantage.
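To illustrate why a shared ledger removes a whole category of work, the minimal sketch below reconciles two independently maintained copies of fund ownership and reports the "breaks" between them. The record layout, investor IDs and the `reconcile` helper are hypothetical; real reconciliations typically cover cash, trades and prices as well as unit balances.

```python
from typing import Dict, Tuple

Holdings = Dict[str, int]  # hypothetical layout: investor ID -> units held

def reconcile(register: Holdings, counterparty: Holdings) -> Dict[str, Tuple[int, int]]:
    """Return each investor whose balance differs between two copies ("breaks")."""
    breaks: Dict[str, Tuple[int, int]] = {}
    for investor in sorted(register.keys() | counterparty.keys()):
        ours, theirs = register.get(investor, 0), counterparty.get(investor, 0)
        if ours != theirs:
            breaks[investor] = (ours, theirs)
    return breaks

# Today's model: the transfer agent and a distributor each maintain their own copy.
ta_register = {"INV-001": 1_000, "INV-002": 250, "INV-003": 75}
distributor_book = {"INV-001": 1_000, "INV-002": 200}  # one stale balance, one missing record
print(reconcile(ta_register, distributor_book))  # {'INV-002': (250, 200), 'INV-003': (75, 0)}

# Shared-ledger model: every party reads the same record, so there is nothing to diverge.
shared_ledger = ta_register
print(reconcile(shared_ledger, shared_ledger))  # {}
```

The point of the second call is structural: when all parties read one record, the comparison has nothing to find, so the reconciliation step itself can be retired.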

One of the most immediate questions raised after our paper was: “Where, exactly, are these savings coming from?”

Our data shows that the largest single source of savings is fund accounting, where firms project a 29.79% reduction in costs – the highest efficiency gain of any function in the operating model.

Close behind is transfer agency, with an expected 25.21% cost reduction, reflecting the heavy manual burden tied to subscriptions, redemptions, and shareholder record-keeping. Compliance monitoring and trade execution also show significant savings potential – at 23.75% and 21.88% respectively – driven by the elimination of redundant data checks, manual approvals, and fragmented reporting processes.
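Applying these projected reductions to the benchmark fund's reported cost lines gives a sense of scale: roughly $626,000 a year in fund accounting and $156,000 in transfer agency. This is a hypothetical illustration: only those two functions have benchmark cost figures in this paper, and fund accounting is treated as exactly $2.1 million even though the reported figure is "more than" that, so the result is a floor.

```python
# Benchmark fund cost lines with a reported dollar figure (fund accounting is a floor).
benchmark_costs = {
    "fund accounting": 2_100_000,
    "transfer agency": 620_459,
}
# Projected cost reductions by function, as reported by surveyed firms.
projected_reduction = {
    "fund accounting": 0.2979,
    "transfer agency": 0.2521,
    "compliance monitoring": 0.2375,  # no benchmark cost line reported
    "trade execution": 0.2188,        # no benchmark cost line reported
}

savings = {
    name: cost * projected_reduction[name]
    for name, cost in benchmark_costs.items()
}
for name, saved in savings.items():
    print(f"{name:>16}: ~${saved:,.0f} a year")
print(f"Combined: ~${sum(savings.values()):,.0f} a year")
```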

These figures demonstrate how tokenisation can streamline not just entire funds, but individual functional processes within them. It’s in these line items, where high-volume tasks and legacy interfaces collide, that the clearest economic upside exists.

Among these, transfer agency consistently emerged as one of the most pressing priorities for transformation. Every respondent in our survey ranked it highly, reflecting the operational burden of fragmented shareholder servicing, record management, and reconciliation-heavy processes. But while transfer agency may be the visible pressure point, the real opportunity lies in rethinking the full post-trade operating model, not just digitising one part of it.

Tokenisation enables a systemic upgrade, in which processes like transfer agency can be redesigned not in isolation but as part of an integrated, ledger-native architecture.

The motivations behind transformation vary by function. While cost is a consistent factor, many areas are also being pushed forward by regulatory change, operational risk, and distributor requirements. Understanding these layered drivers is key to building the right tokenisation roadmap.

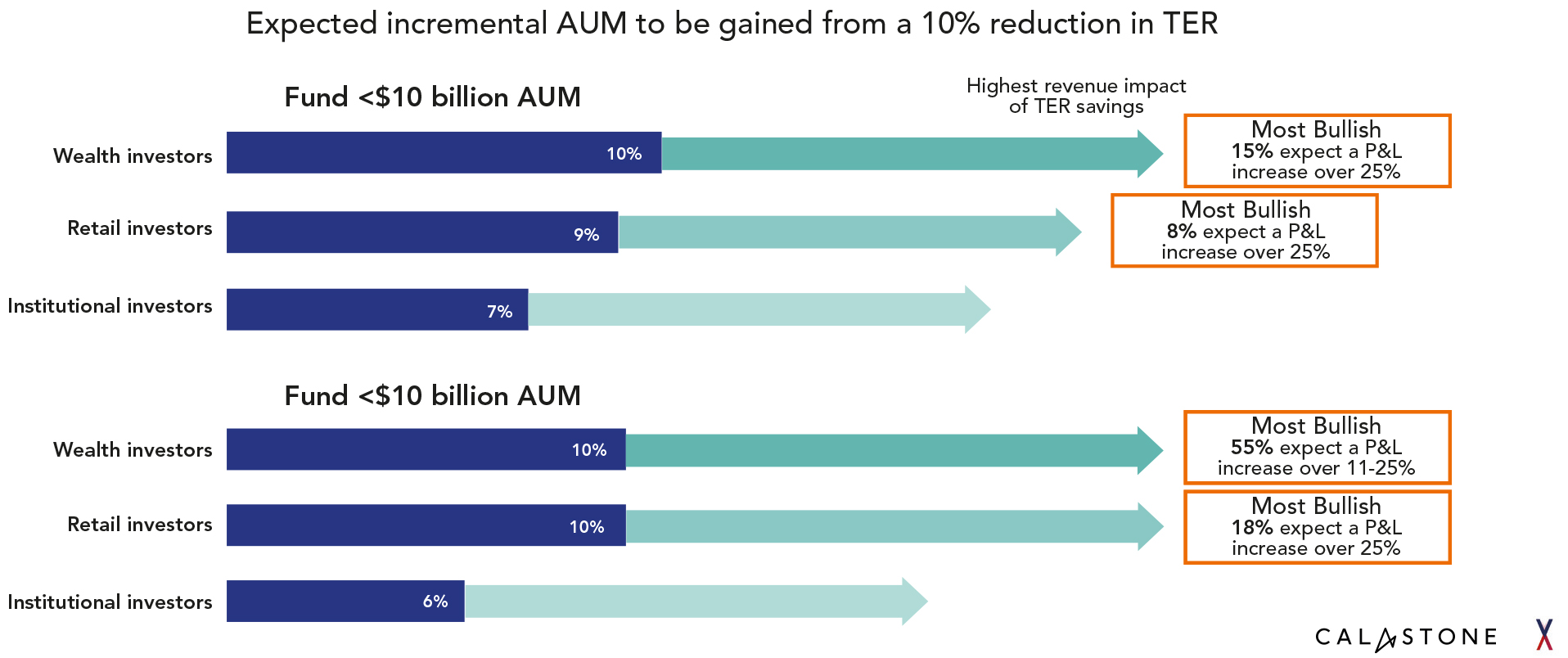

One of the most powerful insights from the data is the link between lower fund operating costs and the ability to reduce Total Expense Ratios (TERs), and what happens when that pricing advantage reaches the market. This is where tokenisation shifts from an operational efficiency play to a growth engine.

The demand-side response is clear. For larger funds (those with over $10 billion in AUM), managers expect that a 10% reduction in TER drives an average 10% increase in AUM from both wealth and retail investors, and a 6% increase from institutional clients. Beneath those averages, some firms are even more bullish: 55% of managers expect a double-digit AUM uplift (between 11% and 25%) from wealth investors, while 18% predict AUM growth exceeding 25% from retail channels.
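One way to read these averages is as a simple elasticity: roughly 1.0 for wealth and retail money and 0.6 for institutional money. The sketch below applies that reading to a hypothetical $12 billion fund whose investor mix is invented purely for illustration, and it assumes, beyond what the survey states, that the response scales linearly with the size of the TER cut.

```python
# Average AUM response to a 10% TER cut for funds over $10bn, as reported.
AUM_UPLIFT_PER_10PCT_TER_CUT = {
    "wealth": 0.10,
    "retail": 0.10,
    "institutional": 0.06,
}

def projected_aum(aum_by_segment: dict, ter_cut: float) -> dict:
    """Segment AUM after a fractional TER cut (e.g. 0.10 for 10%).

    Assumption: the uplift scales linearly with the size of the cut.
    """
    scale = ter_cut / 0.10
    return {
        seg: aum * (1 + AUM_UPLIFT_PER_10PCT_TER_CUT[seg] * scale)
        for seg, aum in aum_by_segment.items()
    }

# Hypothetical $12bn fund; the segment split is invented for illustration.
fund = {"wealth": 3.6e9, "retail": 2.4e9, "institutional": 6.0e9}
after = projected_aum(fund, ter_cut=0.10)
uplift = sum(after.values()) / sum(fund.values()) - 1
print(f"Total AUM uplift: {uplift:.1%}")  # Total AUM uplift: 8.0%
```

On this invented mix, half of which is institutional, a 10% TER cut lifts total AUM by about 8%; a book weighted towards wealth and retail investors would see more.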

Tokenisation changes the growth equation. Lower operating costs create room to cut fees, and lower fees unlock access to price-sensitive segments such as wealth and retail. Operational transformation feeds directly into commercial advantage.

In short, lower costs beget lower fees, which beget greater scale. The firms that move first to capture this advantage stand to take disproportionate market share, while those that wait risk watching that advantage compound in the hands of competitors.

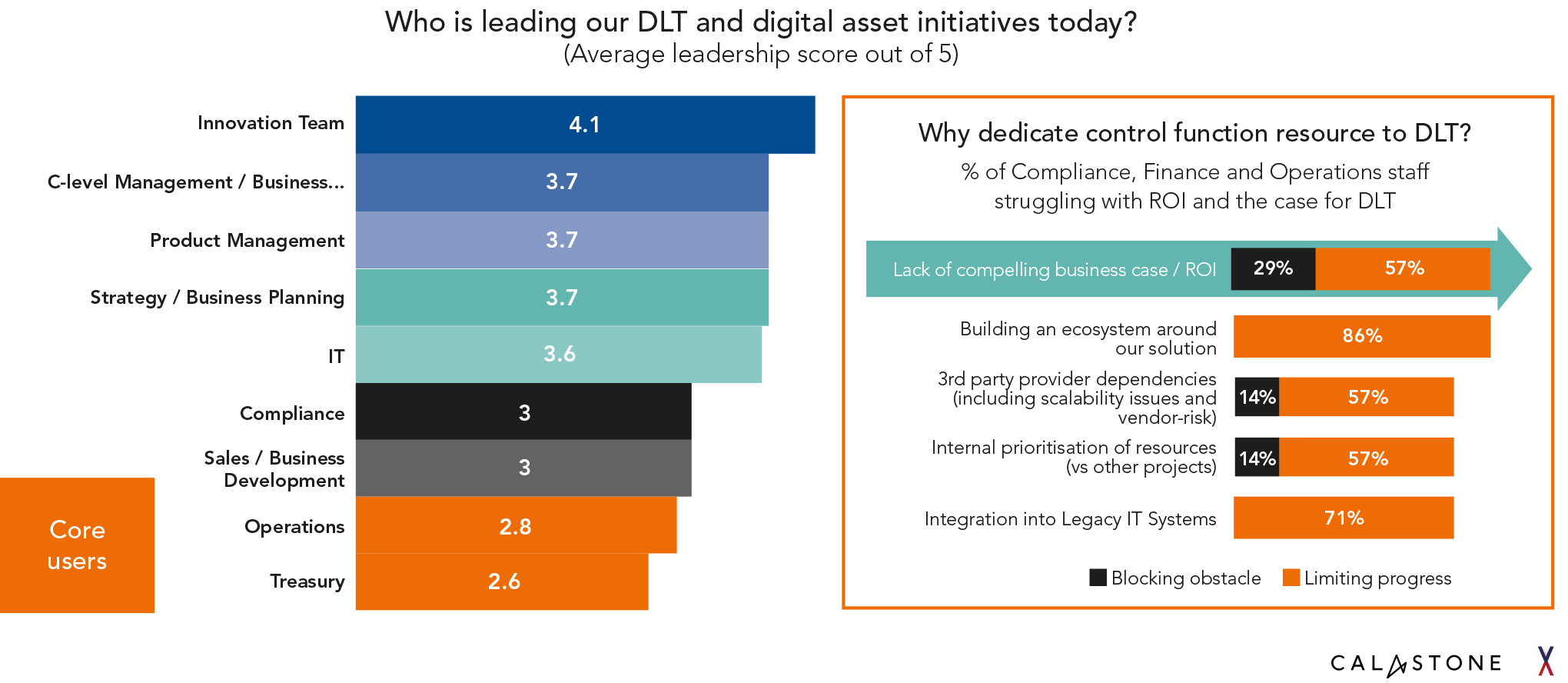

One of the most revealing insights from the dataset is how responsibility for tokenisation initiatives is distributed within organisations. Innovation teams score highest (4.1/5) for driving DLT projects, while core operational functions like Compliance (3.0), Finance (2.6), and Operations (2.8) are less commonly in the lead.

This isn’t necessarily misalignment – it makes sense for innovation teams to pioneer emerging technologies. The deeper issue is what follows: 86% of leaders in Finance, Operations, and Compliance report struggling to fully understand the ROI case for tokenisation. These are the very teams that will ultimately execute change, and their uncertainty is a real barrier to scaling from pilot to production.

This disconnect matters. Proofs of concept may originate in innovation functions, but long-term value will be captured only if the operational core is aligned on the case for transformation. That means equipping delivery teams with clearer economics, practical examples, and measurable outcomes.

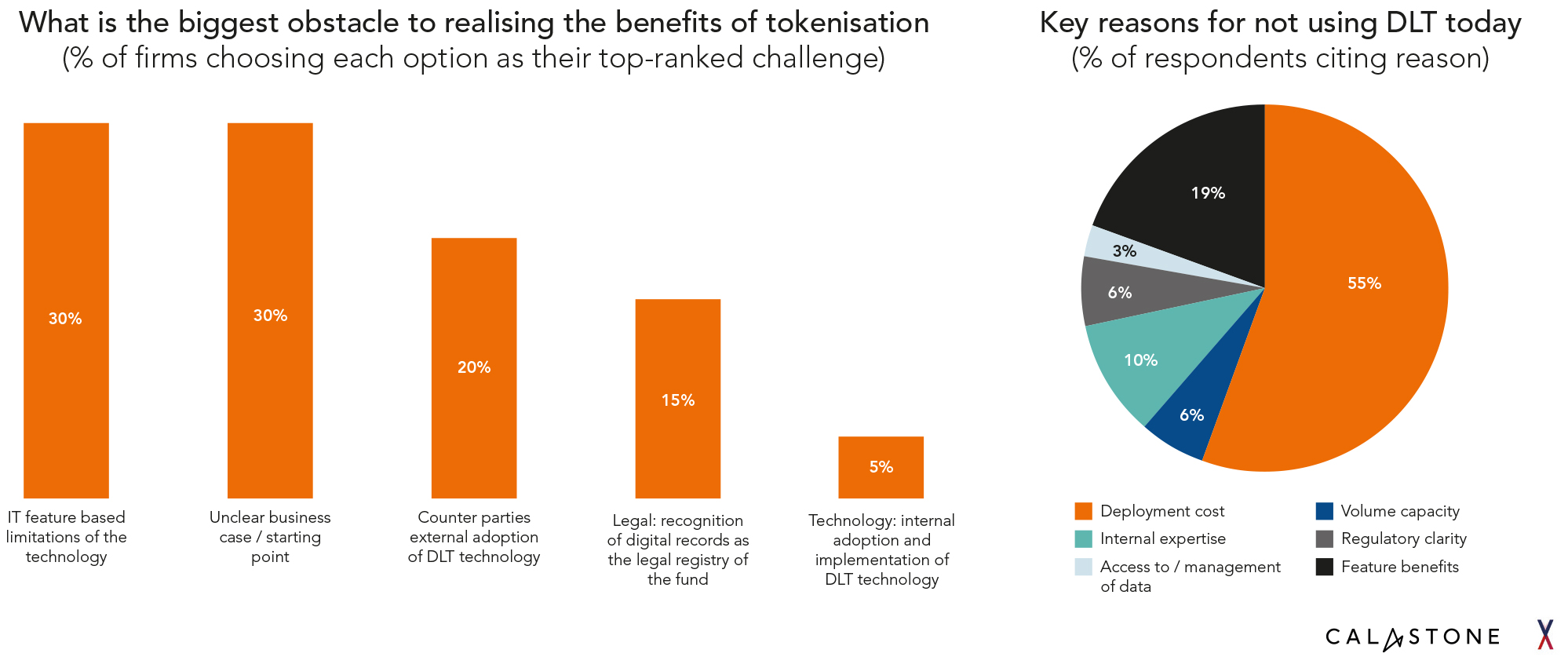

When asked about barriers to adoption, respondents to the wider survey pointed to both internal and external frictions.

Tokenisation can’t be championed by one department alone. Its success depends on cross-functional ownership, and on making the upside real for those charged with delivering it.

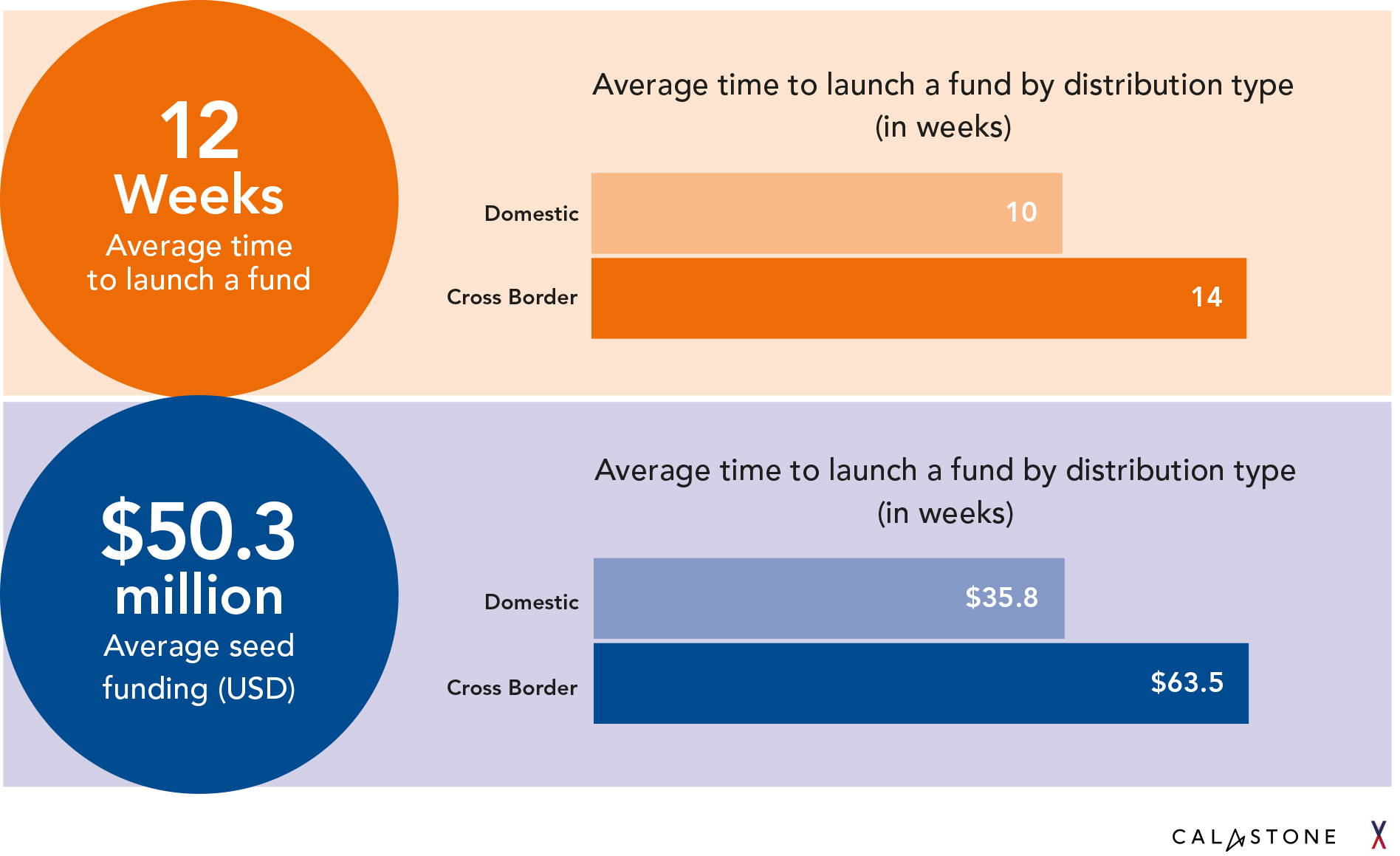

We previously noted that tokenisation could cut the time to launch a new fund from 12 to 9 weeks, and reduce seed funding requirements from $50.3 million to $38.1 million. But those headline figures represent a blended industry average. This data reveals a more nuanced reality, shaped both by the type of fund being launched and by the ambition of individual firms.

At the firm level, expectations vary significantly. While the majority model a 3-week acceleration in launch timelines, some expect to cut them by half or more, shaving 6 weeks or more from a 12-week process. Similarly, although the median reduction in seed funding is 24%, nearly 40% of managers anticipate cuts of between 26% and 50%, delivering potential savings of up to $25.2 million. These outlier expectations reflect a cohort of firms prepared to fundamentally redesign processes rather than simply automate existing ones.
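The seed-funding figures can be checked against the blended baseline. The sketch below assumes the quoted ranges apply to the $50.3 million blended average; `seed_saving` is an illustrative helper, not a Calastone model.

```python
BASELINE_SEED = 50.3   # blended pre-tokenisation seed requirement, USD millions
TOKENISED_SEED = 38.1  # blended post-tokenisation seed requirement, USD millions

def seed_saving(reduction: float, baseline: float = BASELINE_SEED) -> float:
    """Seed capital freed (USD millions) by a fractional reduction in requirements."""
    return baseline * reduction

# Reduction implied by the blended before/after figures.
implied_cut = 1 - TOKENISED_SEED / BASELINE_SEED
print(f"Blended implied reduction: {implied_cut:.1%}")

# The range anticipated by nearly 40% of managers.
for cut in (0.26, 0.50):
    print(f"{cut:.0%} cut frees ~${seed_saving(cut):.2f}m of seed capital")
```

The 50% case frees about $25.15 million, in line with the "up to $25.2 million" figure above, and the blended move from $50.3 million to $38.1 million implies a cut of roughly 24%, close to the reported median.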

There is also a sharp divergence based on fund type. Domestic funds already start from a lower baseline, typically taking 10 weeks to launch with seed funding of $35.8 million. Tokenisation reduces that to 8 weeks and $28.1 million. In contrast, cross-border funds face far greater friction, requiring 14 weeks to launch and $63.5 million in seed capital, nearly 78% more expensive to seed than domestic counterparts. Even after tokenisation, cross-border launches remain more complex but still benefit meaningfully, with launch timelines cut to 10 weeks and seed requirements reduced to $48.3 million.
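A side-by-side comparison makes the divergence easier to see. The sketch below simply restates the reported domestic and cross-border figures and derives the absolute and relative changes; the `LaunchProfile` type is a hypothetical convenience.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class LaunchProfile:
    weeks: int
    seed_m: float  # seed capital in USD millions

# Reported figures per fund type: (current model, tokenised model).
profiles = {
    "domestic": (LaunchProfile(10, 35.8), LaunchProfile(8, 28.1)),
    "cross-border": (LaunchProfile(14, 63.5), LaunchProfile(10, 48.3)),
}

results = {}
for fund_type, (before, after) in profiles.items():
    weeks_saved = before.weeks - after.weeks
    seed_saved = before.seed_m - after.seed_m
    seed_cut = seed_saved / before.seed_m
    results[fund_type] = (weeks_saved, seed_saved, seed_cut)
    print(f"{fund_type:>12}: {weeks_saved} weeks faster, "
          f"${seed_saved:.1f}m less seed ({seed_cut:.1%})")
```

On these figures, cross-border launches gain twice as many weeks as domestic ones (4 versus 2) and a slightly larger proportional seed reduction (about 24% versus about 21.5%).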

This distributional view matters. It shows that tokenisation does not offer a one-size-fits-all outcome: the scale of benefit depends on both the operational environment and how aggressively a firm is willing to embrace transformation.

For cross-border funds in particular, the payoff is clear. The operational drag of multi-jurisdictional compliance, duplicated set-up tasks, and fragmented infrastructure is what drives the higher cost base. By replacing that fragmented infrastructure with distributed ledgers – a single source of truth for ownership, transactions, and compliance – tokenisation offers a direct route to simplifying cross-border fund launches, collapsing complexity, and materially lowering the barriers to scale.

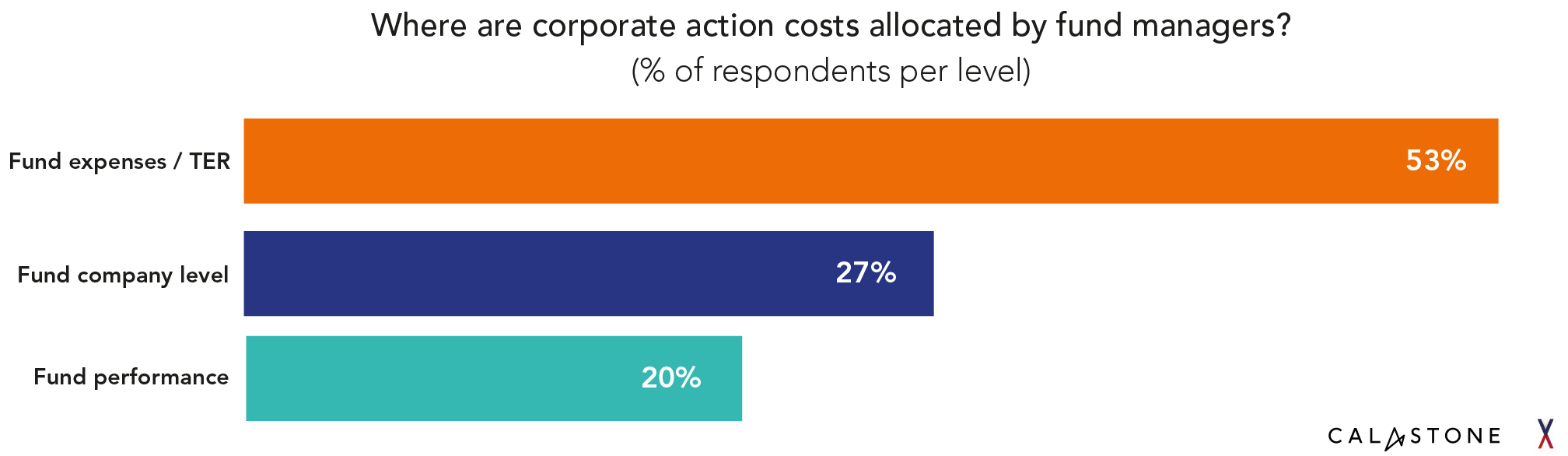

One finding that has yet to enter public discussion is the treatment of corporate action costs and how inconsistently the industry manages them. The data shows a market with no clear standard. A slim majority – 53% of managers – pass these costs directly into the TER, embedding them in the fees charged to investors. Another 27% absorb them at the fund company level, treating them as an operational overhead. Meanwhile, 20% assign them to fund performance, meaning the costs directly impact investor returns but remain largely invisible.

This inconsistency matters. Corporate actions represent a significant operational load, especially when managed across multiple intermediaries and fragmented systems. Yet there is no universally accepted framework for how these costs are allocated, and end investors are left with very limited visibility into how, or even where, these costs impact them.

It’s a problem hiding in plain sight, and one that tokenisation is uniquely positioned to solve. A distributed ledger approach enables a far more automated and transparent model, with smart contracts driving real-time execution, reducing manual intervention, and simplifying cost allocation.
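The sketch below is a hypothetical Python stand-in for the kind of logic a smart contract might run for a cash dividend: it pays holders pro rata from a single holdings snapshot and discloses each holder's share of the processing cost alongside the allocation policy applied. A production smart contract would run on a ledger platform in that platform's own language; all names, amounts and the pro-rata cost-sharing rule here are assumptions.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict

class CostPolicy(Enum):
    """The three treatments of corporate action costs reported in the survey."""
    TER = "embedded in the TER"
    FUND_COMPANY = "absorbed by the fund company"
    PERFORMANCE = "charged to fund performance"

@dataclass(frozen=True)
class HolderStatement:
    units: int
    dividend: float
    cost_share: float  # this holder's pro-rata share of the processing cost
    cost_policy: CostPolicy

def execute_dividend(holdings: Dict[str, int], rate_per_unit: float,
                     processing_cost: float, policy: CostPolicy) -> Dict[str, HolderStatement]:
    """Pay a per-unit dividend from one holdings snapshot and disclose each holder's cost share."""
    total_units = sum(holdings.values())
    return {
        holder: HolderStatement(
            units=units,
            dividend=units * rate_per_unit,
            cost_share=processing_cost * units / total_units,
            cost_policy=policy,
        )
        for holder, units in holdings.items()
    }

snapshot = {"INV-001": 6_000, "INV-002": 3_000, "INV-003": 1_000}  # hypothetical register
statements = execute_dividend(snapshot, rate_per_unit=0.25,
                              processing_cost=500.0, policy=CostPolicy.TER)
for holder, s in statements.items():
    print(f"{holder}: dividend ${s.dividend:,.2f}; "
          f"cost share ${s.cost_share:,.2f} ({s.cost_policy.value})")
```

The design choice worth noting is that the cost and its treatment travel with each holder's statement, which is the kind of visibility the survey suggests end investors currently lack.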

Corporate actions may be operational plumbing, but in the tokenised future, they are a prime candidate for innovation.

Tokenisation has often been discussed in visionary terms: as a revolution in market structure, investor experience, or product design. But as this paper shows, the real story lives in the detail.

The underlying cost structure of fund operations is fragmented, manual, and increasingly expensive. Errors are frequent and costly. Internal teams are misaligned. Fund launches are unnecessarily slow, especially cross-border. And key activities like corporate actions remain opaque.

Each of these issues is not just a pain point but an opportunity, one that tokenisation can uniquely address.

Zooming out, these operational insights reinforce the broader conclusion of our original research: that tokenisation could deliver over $135 billion in total economic upside across the industry. But that opportunity is not guaranteed – it will only be realised by firms willing to look inward, to confront the detailed processes, functions, and costs explored in this paper, and to start their transformation at the operational core.

The data also surfaces a critical truth: tokenisation is not only an opportunity but a defence against an operating model that is only getting more expensive. Fund processing costs are forecast to climb by 32% over the next three years if current models persist. Firms that delay risk locking in a higher cost base, one that becomes progressively harder to unwind. At the same time, the window for early-mover advantage is wide open.

Our belief remains that tokenisation will not be an overnight change but an incremental transformation. But this data shows clearly where that transformation can start, with measurable, fund-level metrics that make the business case impossible to ignore. The firms that act now will define the benchmarks that everyone else will eventually have to follow.